A collaborative IDE designed for test-driven development

Athina helps AI teams experiment with data and build powerful AI applications 10x faster.

Athina allows you to experiment with your data in a powerful editor that feels like a spreadsheet.

Powerful AI products are a team effort.

Empower non-technical users to prototype, experiment, and run tests.

Collaborate seamlessly with technical team members to run more complex experiments programmatically.

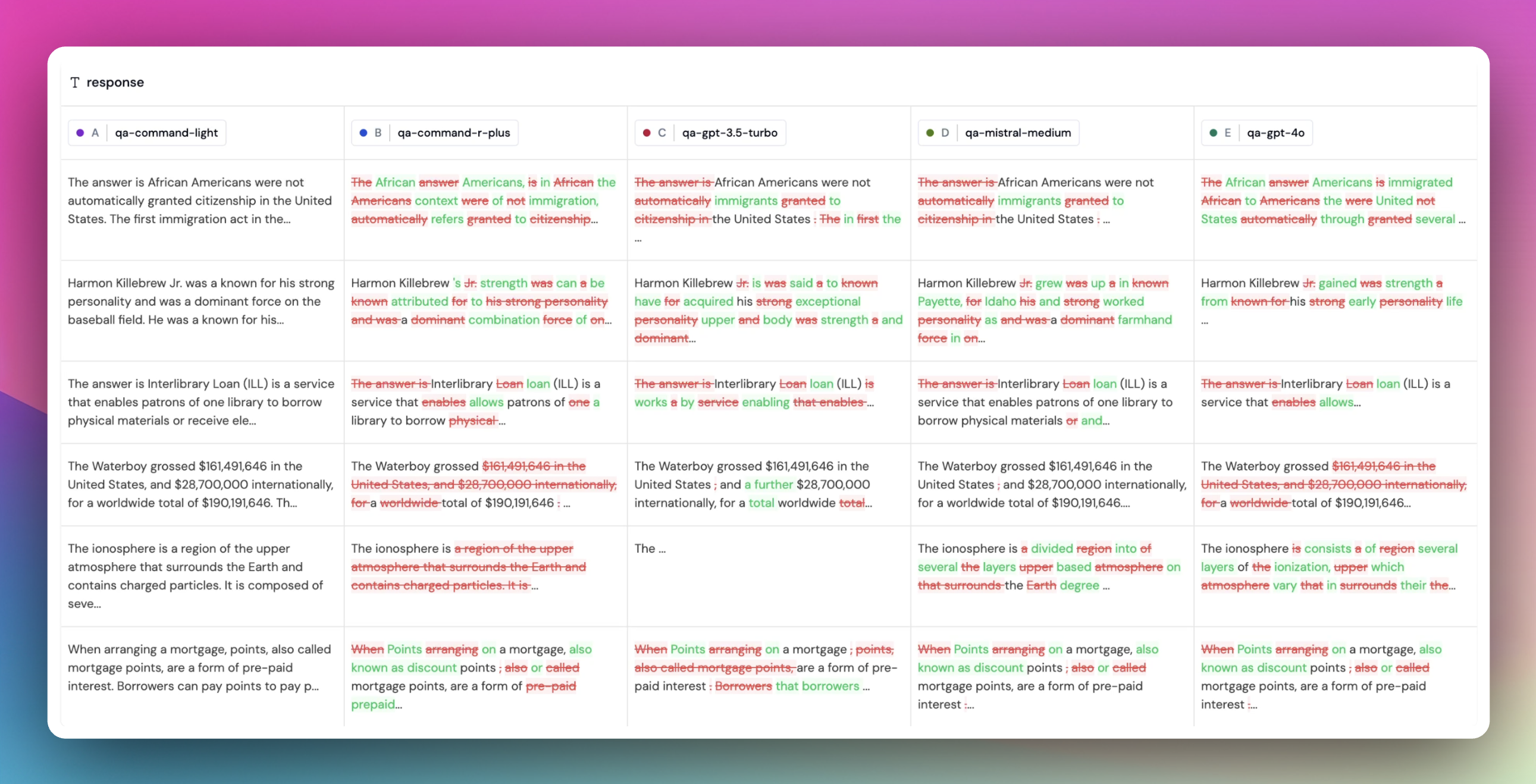

Compare everything side-by-side

Athina is designed to help you compare data manually, as well as programmatically.

Run evaluations on a dataset in seconds

Evaluate your model on your entire dataset in just a few clicks with Athina.

Ragas Faithfulness: 247 failed

Context Relevancy: 68 failed

No PII in response: 95 failed

No financial figures: 190 failed

Prompt Injections: 19 failed
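A per-evaluation failure tally like the one above can be derived from raw result rows in a few lines. This is a minimal sketch in plain Python, not the Athina SDK; the row layout (`eval_name`, `passed`) and the sample rows are assumptions made for illustration.

```python
from collections import Counter


def count_failures(results):
    """Return a Counter mapping each eval name to its number of failed rows."""
    failures = Counter()
    for row in results:
        if not row["passed"]:
            failures[row["eval_name"]] += 1
    return failures


# Hypothetical result rows; real rows would come from an evaluation run.
sample_results = [
    {"eval_name": "Context Relevancy", "passed": False},
    {"eval_name": "Context Relevancy", "passed": True},
    {"eval_name": "No PII in response", "passed": False},
    {"eval_name": "Context Relevancy", "passed": False},
]

failure_counts = count_failures(sample_results)
for name, failed in failure_counts.most_common():
    print(f"{name}: {failed} failed")
```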

Integrates with your code seamlessly

You can use Athina to experiment without writing a single line of code.

...but where's the fun in that?

In just a few lines, Athina datasets can integrate with your codebase so you can run experiments, read from Athina datasets, and write to them programmatically.

```python
import athina
from athina.evals import DoesResponseAnswerQuery, ContextContainsEnoughInformation, Faithfulness

# Model used to grade each evaluation
eval_model = "gpt-4o"

# Evaluations to run against every row in the dataset
eval_suite = [
    DoesResponseAnswerQuery(model=eval_model),
    Faithfulness(model=eval_model),
    ContextContainsEnoughInformation(model=eval_model),
]

# Run the evaluation suite
# (`dataset` is assumed to have been loaded earlier)
athina.run(
    evals=eval_suite,
    data=dataset,
    max_parallel_evals=10,
)
```
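The snippet above leaves `dataset` undefined. One plausible shape, assumed here and not taken from the Athina documentation, is a list of dictionaries with `query`, `context`, and `response` fields. The helper `validate_rows` is hypothetical; it only checks that each row carries those fields before the data is handed to an evaluation run.

```python
# Assumed field names, chosen for illustration only.
REQUIRED_FIELDS = ("query", "context", "response")


def validate_rows(rows):
    """Return the indexes of rows with a missing or empty required field."""
    bad = []
    for index, row in enumerate(rows):
        if any(not row.get(field) for field in REQUIRED_FIELDS):
            bad.append(index)
    return bad


dataset = [
    {
        "query": "What is the capital of France?",
        "context": ["Paris is the capital of France."],
        "response": "Paris",
    },
    {
        "query": "Who wrote Hamlet?",
        "context": [],  # empty context, so this row is flagged
        "response": "William Shakespeare",
    },
]

invalid = validate_rows(dataset)
print(f"{len(invalid)} of {len(dataset)} rows are incomplete: {invalid}")
```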

Experiment without changing your stack

Integrated with the ecosystem tools you already use

Athina works with tools you already use, such as LangChain, LlamaIndex, Ragas, Guardrails, Azure OpenAI, AWS Bedrock, and more.

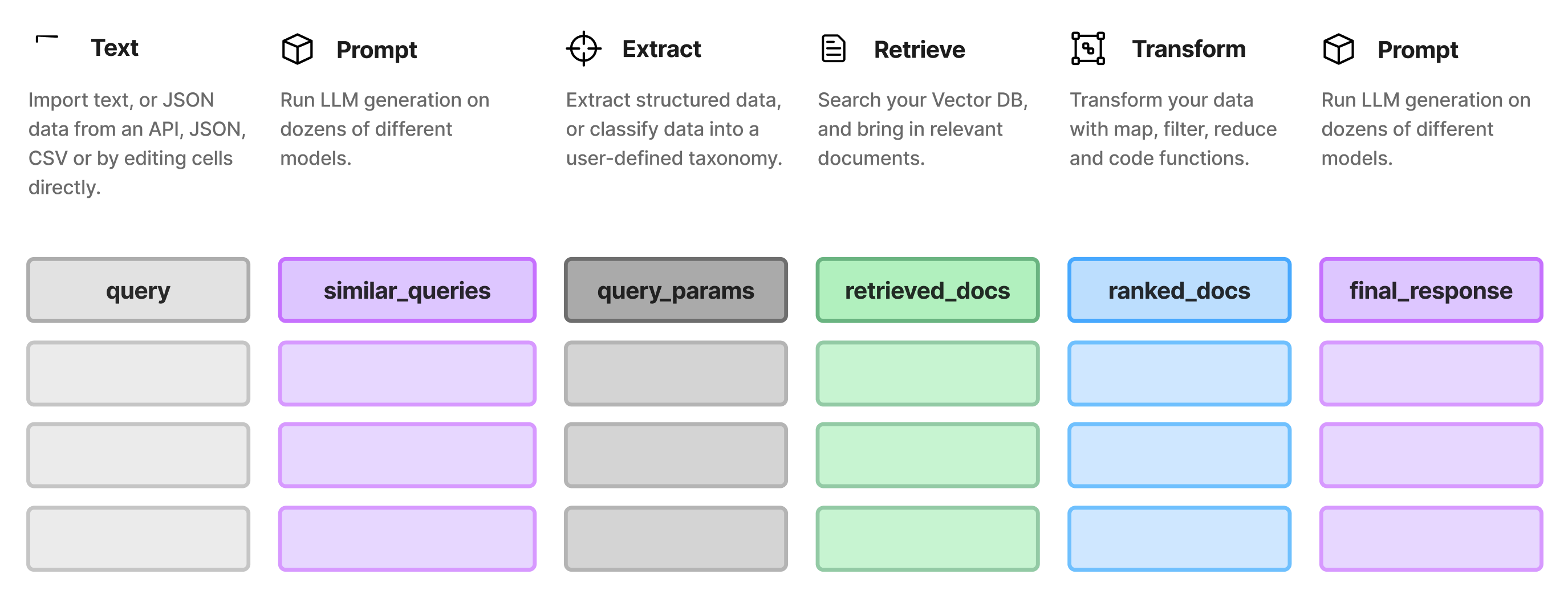

Workflows designed for developing LLM apps rapidly

A suite of tools tailored for rapid experimentation, evaluation, and iteration on your AI models.

Compare responses side-by-side

Analyze and compare the outputs of various models easily.
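As a rough illustration of what a programmatic side-by-side comparison might look like, the sketch below pairs two models' outputs per prompt and flags the rows where they disagree. It is plain Python under stated assumptions: the function name `side_by_side`, the column names, and the sample outputs are all hypothetical.

```python
def side_by_side(prompts, outputs_a, outputs_b):
    """Pair two models' outputs per prompt and flag rows where they differ."""
    if not (len(prompts) == len(outputs_a) == len(outputs_b)):
        raise ValueError("all inputs must have the same length")
    return [
        {"prompt": p, "model_a": a, "model_b": b, "differs": a.strip() != b.strip()}
        for p, a, b in zip(prompts, outputs_a, outputs_b)
    ]


# Hypothetical prompts and model outputs.
prompts = ["2 + 2 = ?", "Capital of Japan?"]
model_a = ["4", "Tokyo"]
model_b = ["4", "Kyoto"]

comparison = side_by_side(prompts, model_a, model_b)
for row in comparison:
    marker = "DIFF" if row["differs"] else "same"
    print(f"[{marker}] {row['prompt']} | A: {row['model_a']} | B: {row['model_b']}")
```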

Experiment with prompts & models

Try out different prompts to see how they affect the model’s output.
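One simple way to try several prompts against the same input is to keep them as named templates and render each one. The sketch below assumes nothing about Athina itself; `PROMPT_VARIANTS` and `render_variants` are hypothetical names, and the rendered prompts would still need to be sent to a model of your choice.

```python
# Hypothetical prompt templates keyed by variant name.
PROMPT_VARIANTS = {
    "terse": "Answer briefly: {question}",
    "step_by_step": "Think step by step, then answer: {question}",
}


def render_variants(question, variants=PROMPT_VARIANTS):
    """Fill every prompt template with the same question."""
    return {
        name: template.format(question=question)
        for name, template in variants.items()
    }


rendered = render_variants("Why is the sky blue?")
for name, prompt in rendered.items():
    print(f"{name}: {prompt}")
```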

Test different retrieval strategies

Adjust retrieval settings to fine-tune model performance.

Run batch evaluations in seconds

Evaluate model performance using your custom datasets.

Generate synthetic data

Create synthetic data to augment your training datasets.

Prototype data pipelines rapidly

Quickly build and test data processing pipelines.

Filter and transform data

Easily filter and transform your data for analysis.
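When working from code, filtering and transforming usually come down to a predicate and a mapping function. The sketch below is plain Python with hypothetical helper names (`filter_rows`, `transform_rows`) and made-up rows; it drops rows with empty responses and normalizes the query text without mutating the input.

```python
def filter_rows(rows, predicate):
    """Keep only the rows for which predicate(row) is true."""
    return [row for row in rows if predicate(row)]


def transform_rows(rows, fn):
    """Apply fn to every row and return the new rows."""
    return [fn(row) for row in rows]


# Hypothetical dataset rows.
rows = [
    {"query": "  Reset my password ", "response": "Use the reset link."},
    {"query": "Billing question", "response": ""},
]

non_empty = filter_rows(rows, lambda row: row["response"].strip() != "")
cleaned = transform_rows(
    non_empty,
    # Build a new dict so the original row is left untouched.
    lambda row: {**row, "query": row["query"].strip().lower()},
)
print(cleaned)
```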

Export data or access via code

Export your processed data in various formats, or access it directly from code.
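For rows already available in code, writing them out as CSV or JSON Lines needs only the Python standard library. This is a minimal sketch, assuming flat dictionaries that share the same keys; `to_csv` and `to_jsonl` are hypothetical helpers, not part of Athina.

```python
import csv
import io
import json


def to_csv(rows):
    """Serialize flat dicts to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_jsonl(rows):
    """Serialize rows as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(row) for row in rows)


# Hypothetical rows to export.
rows = [
    {"query": "Capital of France?", "response": "Paris"},
    {"query": "Capital of Japan?", "response": "Tokyo"},
]

csv_text = to_csv(rows)
jsonl_text = to_jsonl(rows)
print(csv_text)
print(jsonl_text)
```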

Built for product teams to work faster together

Collaborate with the entire team

Both technical and non-technical members of your team can collaborate across the lifecycle of your AI development process.